Overview

The team’s research and development into new imaging technologies for the mining industry can sometimes translate well to other applications. For a number of years, the team has been developing a sensor fusion technology based on its patented method (PCT WO2006024091).

Passive dense stereo-photogrammetry sensors typically offer high precision and high point density. However, they can also involve relatively slow data processing, and they perform poorly when image texture is lacking.

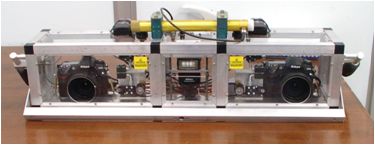

Examples of passive stereo-vision sensing: a stereo camera developed by CSIRO for the mining industry (left) and the version commercialised through DataMine (right).

Fused sensors

Active sensors, such as flash LIDAR, can generate 3D point clouds quickly, but those point clouds are sparse and have relatively low precision and accuracy.

Referred to as Stereo-depth fusion (or SDF), the CSIRO technology integrates a stereo vision system with prior knowledge of the scene, such as that provided by a laser ranging system, to benefit from the advantages of each technology. The result is a software and hardware system capable of achieving high-fidelity dense stereo matching at very high frame rates. The applications are numerous: the technology is well suited to any scenario where short-range 3D sensing is required or where prior knowledge of the scene is available.
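One way to see why a range prior can help stereo matching is the standard rectified pinhole relation, disparity = focal length x baseline / depth. The sketch below is a generic illustration only and is not the CSIRO or patented method: it assumes a rectified stereo pair, and the names `StereoRig`, `depth_to_disparity` and `disparity_window`, together with all numeric values, are hypothetical. It shows how a depth estimate with a tolerance (for example from a ranging sensor) narrows the set of disparities a matcher would need to test for a pixel.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class StereoRig:
    """Hypothetical rectified stereo rig; the values used below are illustrative."""
    focal_px: float    # focal length expressed in pixels
    baseline_m: float  # distance between the two cameras in metres


def depth_to_disparity(rig: StereoRig, depth_m: float) -> float:
    """Rectified pinhole relation: disparity (px) = focal (px) * baseline (m) / depth (m)."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return rig.focal_px * rig.baseline_m / depth_m


def disparity_window(rig: StereoRig, prior_depth_m: float,
                     depth_tol_m: float, max_disparity: int) -> tuple[int, int]:
    """Integer disparity range implied by a depth prior of prior_depth_m +/- depth_tol_m."""
    near = max(prior_depth_m - depth_tol_m, 1e-6)  # nearer surface -> larger disparity
    far = prior_depth_m + depth_tol_m              # farther surface -> smaller disparity
    d_hi = min(depth_to_disparity(rig, near), float(max_disparity))
    d_lo = max(depth_to_disparity(rig, far), 0.0)
    return math.floor(d_lo), math.ceil(d_hi)


# Assumed example values: 1000 px focal length, 10 cm baseline,
# and a range prior of 2.0 m +/- 0.1 m for the pixel of interest.
rig = StereoRig(focal_px=1000.0, baseline_m=0.1)
lo, hi = disparity_window(rig, prior_depth_m=2.0, depth_tol_m=0.1, max_disparity=128)
full_candidates = 128 + 1        # disparities 0..128 without any prior
prior_candidates = hi - lo + 1   # disparities left after applying the prior
print(f"search window: {lo}..{hi} px "
      f"({prior_candidates} candidates instead of {full_candidates})")
```

Under these assumed numbers the matcher tests a handful of disparities rather than the full range, which illustrates in general terms how a prior can make matching both faster and less ambiguous in low-texture regions.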

The team has designed SDF systems for both mining and manufacturing environments where the applications benefit from more robust stereo matching and higher frame rates. An ongoing collaboration with Boeing is further demonstrating the benefits of this approach.


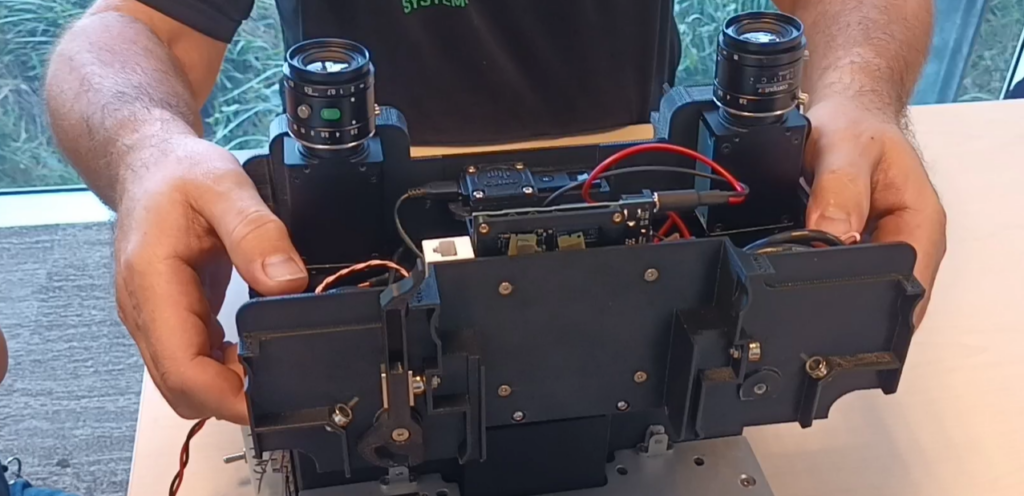

Left: CSIRO prototype SDF sensor consisting of two machine vision cameras and a flash LIDAR. Right: CSIRO testing laboratory consisting of multiple sensors.

The team has also been collaborating closely with the Workspace Team in CSIRO Data61 to deploy this technology in their Mixed Reality Lab.

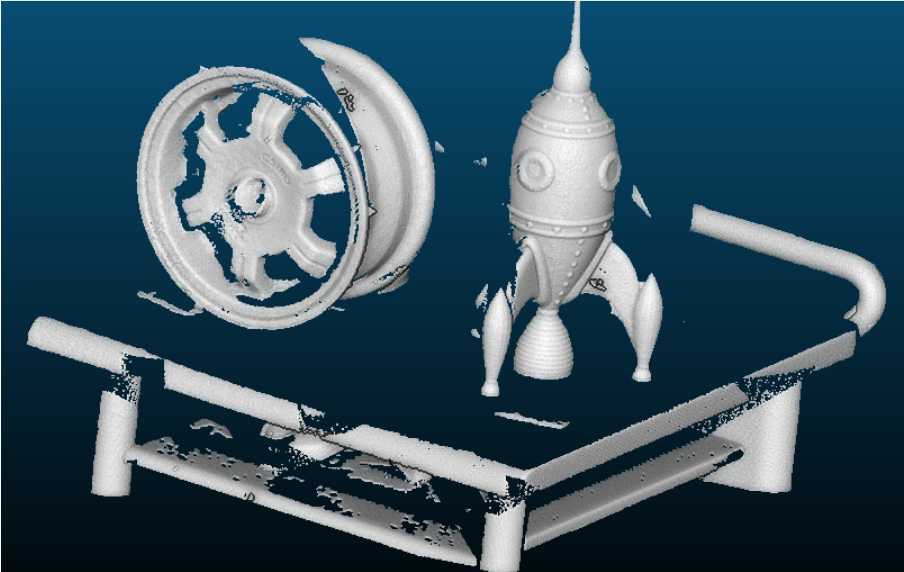

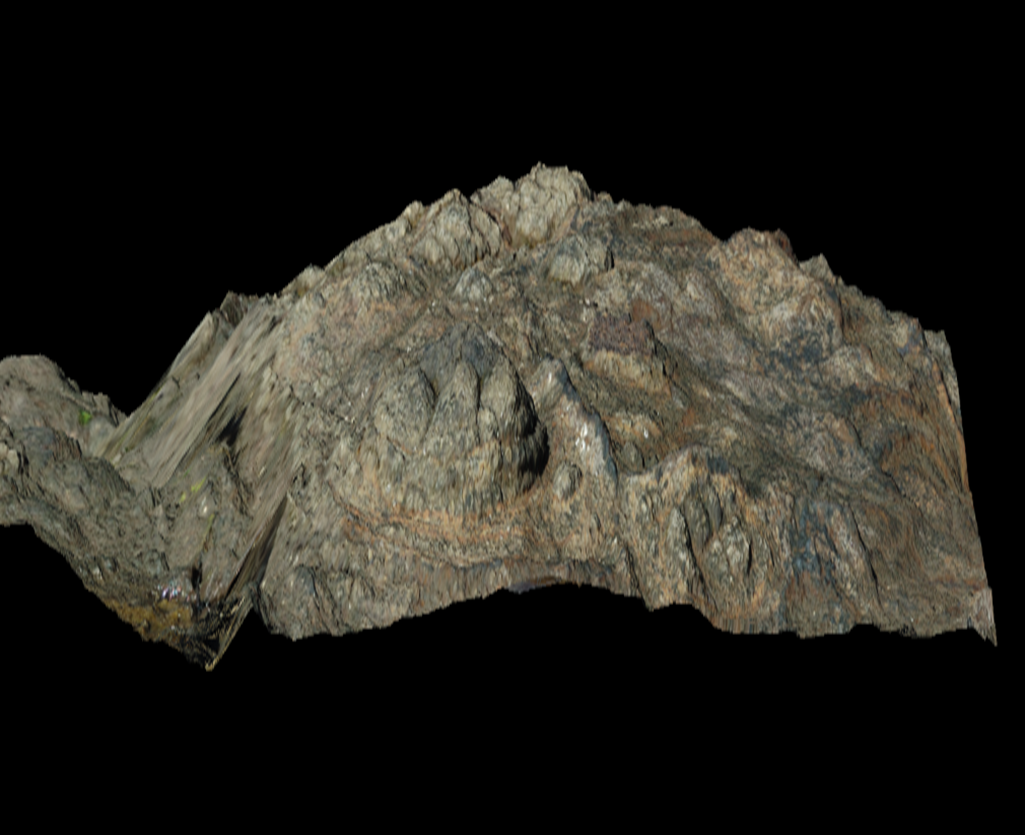

Example 3D model built with CSIRO 3D Stereo-depth fusion technology at the CSIRO Mixed Reality Lab.

Mobile sensing applications

Another project under way is the Multi-Resolution Scanner project. Funded by the Space Technology Future Science Platform, this work is being conducted in collaboration with Data61 and with support from Boeing, NASA and the ISS National Lab. It will develop a sensor payload for various space-based applications, including interior mapping of space vehicles such as the International Space Station. Applications for this compact, fused sensor payload are also being investigated in terrestrial machine vision contexts such as mining, manufacturing and civil engineering.

Example of the sensor package being developed for space-based applications, fusing stereo-vision and time-of-flight sensing modalities.

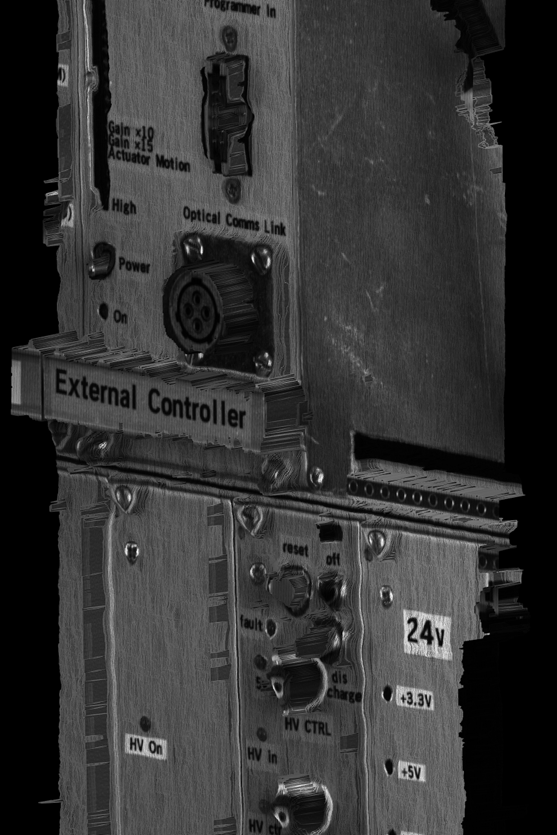

Example 3D models built with CSIRO 3D Stereo-depth fusion technology: (1) the QCAT Lab control panel; (2) a rock outcrop in the field, around 1 m x 1 m in size.