Visual Processing for the Bionic Eye

In 2004, there were 50,000 people legally blind in Australia, with numbers expected to increase to 87,000 by 2024 with the ageing population (“Eye Research Australia Insight: The Economic Impact and Cost of Vision Loss in Australia” by Centre for Eye Research Australia).

Although there are many useful devices on the market to assist individuals with vision impairment, there is a lack of the kind of sensor-based assistance systems now appearing in cars (e.g., lane departure warning, collision warning, navigation).

This project aims to develop a new generation of assistive devices (stand-alone wearable devices that individuals can use without medical intervention) based on computer vision processing: it will produce prototype devices that aim to demonstrate effective assistance for individuals with vision impairment.

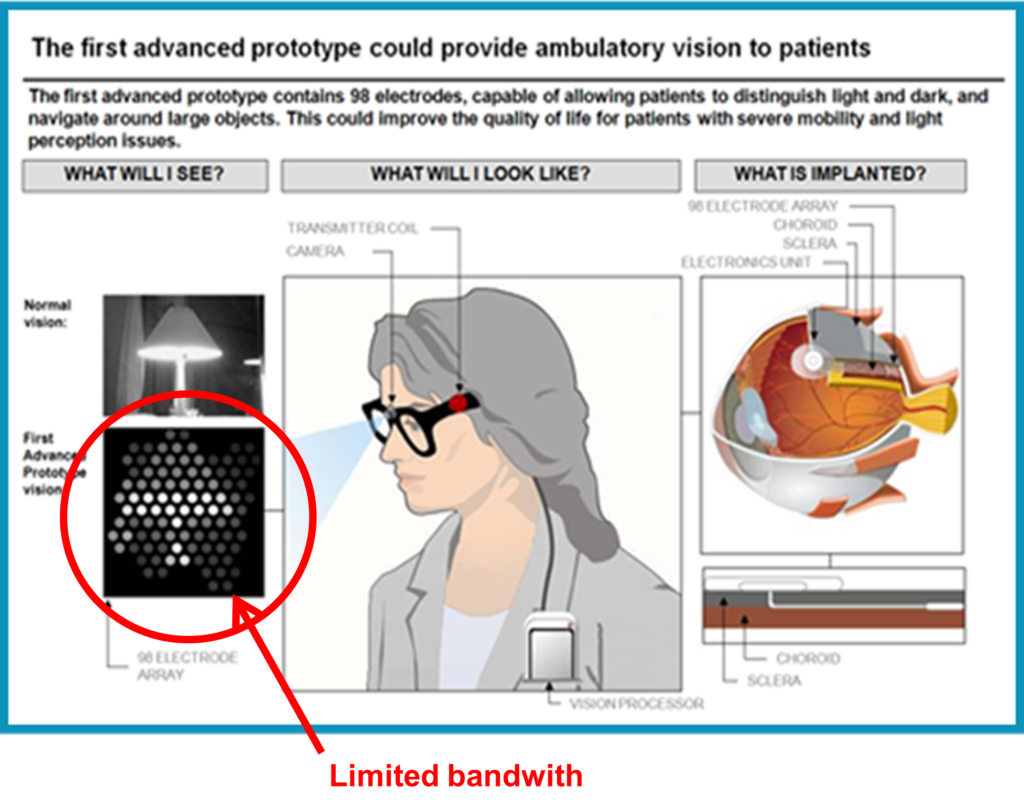

Through Nick Barnes, a lead investigator, VIBE is part of Bionic Vision Australia, a Strategic Research Initiative funded by the Australian Research Council, 2010-2014, at $50M. The Bionic Vision Australia partnership aims to build the first Australian bionic eye implant, whereby individuals may recover some of their lost vision via electrical stimulation of the retina. Vision processing will be one of the key components of a bionic eye, as it will enable efficient encoding of high-resolution images into a set of stimulation signals on a retinal implant. Nick Barnes is also a lead investigator on NHMRC Project Grant 1082358, 2015-2018, with partners the Centre for Eye Research Australia and the Bionics Institute; this project will include a trial in which patients receive a retinal implant.

Research Outcomes

Commencing in 2012, three patients were implanted with a 20-electrode retinal implant. During the trial we investigated the effectiveness of a number of vision processing techniques that we developed for prosthetic vision. These ran as software that took images from a head-worn camera and converted them to electrical stimulation on the device.
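As a rough illustration of the camera-to-stimulation step described above, the sketch below averages a grayscale frame over a coarse grid and quantizes each cell to a small number of stimulation levels. The rectangular grid, the number of levels, and the function name `image_to_stimulation` are assumptions made for the example; the source does not describe the electrode layout or the encoding used in the trial.

```python
def image_to_stimulation(image, grid_rows, grid_cols, levels):
    """Map a grayscale image (rows of 0..255 values) to a coarse grid.

    Each grid cell stands in for one electrode (an assumed layout). The
    cell value is the block mean quantized to an integer in 0..levels-1.
    """
    height, width = len(image), len(image[0])
    if height < grid_rows or width < grid_cols:
        raise ValueError("image is smaller than the electrode grid")

    stimulation = []
    for r in range(grid_rows):
        # Pixel rows covered by this grid row.
        r0, r1 = r * height // grid_rows, (r + 1) * height // grid_rows
        row = []
        for c in range(grid_cols):
            # Pixel columns covered by this grid column.
            c0, c1 = c * width // grid_cols, (c + 1) * width // grid_cols
            block = [image[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            mean = sum(block) / len(block)
            # Scale 0..255 onto 0..levels-1 and clamp the top value.
            row.append(min(levels - 1, int(mean * levels / 256)))
        stimulation.append(row)
    return stimulation
```

For instance, an 8 x 10 frame whose left half is black and right half is white, mapped to an assumed 4 x 5 grid with 8 levels, gives four rows of `[0, 0, 3, 7, 7]`; the middle cell straddles the edge and so receives an intermediate level.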

In human implanted studies with three patients, we have shown, for the first time, that a vision processing method suitable for a wide variety of tasks can improve results on low vision tests (Conference Abstract 1).

We have shown that using scene understanding techniques in vision processing can improve the results in simulated prosthetic vision with normally-sighted participants using a prosthetic vision simulator (Journal Article 1). We have also tested scene understanding techniques in implanted patient trials (http://www.smh.com.au/technology/sci-tech/bionic-eye-trial-really-promising-20140430-zr1zh.html).

We have contributed to showing the effectiveness of the device overall (Journal Article 3).

Who will benefit?

The Bionic Eye project targets two common retinal diseases:

(L-R) Image 1: Normal vision; Image 2: Age-related macular degeneration (prevalence 1 in 5000); Image 3: Retinitis pigmentosa (leading cause of blindness in developing countries)


The Bionic Vision Australia solution

Developing the first Australian retinal implant.

Problem

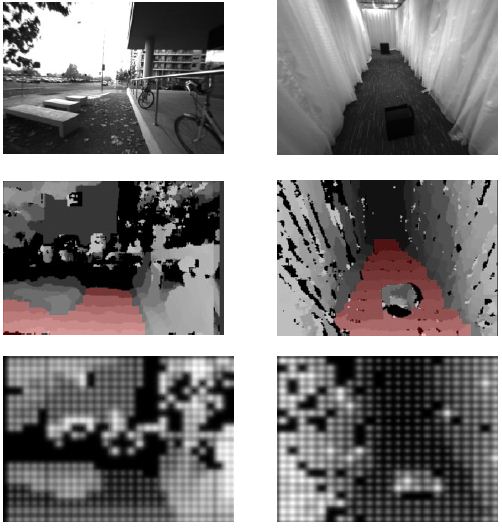

Perceiving trip hazards is critical for safe mobility and is an important ability for prosthetic vision to support. We take the approach of creating a visual representation that augments the appearance of obstacles in the environment.

Approach

- Trip hazards not marked by abrupt intensity change can be difficult to perceive using standard prosthetic vision scene representations.

- Current segmentation algorithms do not guarantee preservation of low contrast surface boundaries.

We contribute:

- an algorithm to estimate traversable space (and its boundaries) using binocular images

- a system for highlighting trip hazards in prosthetic vision.
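To make the idea of estimating traversable space from binocular images concrete, here is a minimal sketch. It relies on the fact that, in a rectified stereo pair, a planar ground surface produces disparity that is an affine function of image position, d = a*u + b*v + c. The code fits that plane to the bottom rows of a disparity map (assumed to show ground) and marks pixels close to the plane as traversable. This is a plain least-squares stand-in, not the algorithm contributed by the project; the function names, `seed_rows`, and `tolerance` are hypothetical.

```python
def fit_disparity_plane(samples):
    """Least-squares fit of d = a*u + b*v + c to (u, v, d) samples."""
    # Build the augmented normal equations for design rows [u, v, 1].
    m = [[0.0] * 4 for _ in range(3)]
    for u, v, d in samples:
        row = (float(u), float(v), 1.0)
        for i in range(3):
            for j in range(3):
                m[i][j] += row[i] * row[j]
            m[i][3] += row[i] * d
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate samples: cannot fit a plane")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                factor = m[r][col] / m[col][col]
                for k in range(col, 4):
                    m[r][k] -= factor * m[col][k]
    return tuple(m[i][3] / m[i][i] for i in range(3))


def traversable_mask(disparity, seed_rows, tolerance):
    """Return a boolean mask: True where disparity matches the ground plane.

    Assumes the bottom `seed_rows` rows of the disparity map show ground.
    """
    height, width = len(disparity), len(disparity[0])
    samples = [(u, v, disparity[v][u])
               for v in range(height - seed_rows, height)
               for u in range(width)]
    a, b, c = fit_disparity_plane(samples)
    return [[abs(disparity[v][u] - (a * u + b * v + c)) <= tolerance
             for u in range(width)]
            for v in range(height)]
```

Pixels where the mask is False deviate from the fitted ground plane and are candidate obstacles. A fit like this does nothing to preserve low-contrast surface boundaries, which is the difficulty noted in the list above.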

From ground plane segmentation to augmented depth phosphene images.

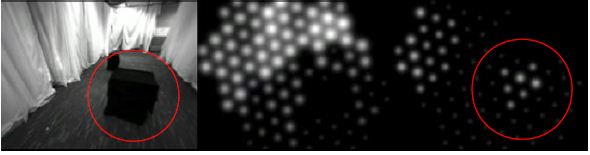

Augmented depth vs intensity based on 98 phosphenes (based on BVA’s first generation 98 electrode implant). (L-R) Image 1: original; Image 2: intensity based; Image 3: augmented depth
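The contrast between the two renderings can be sketched in a few lines. The function below is one plausible reading of a depth-based rendering that augments obstacles: phosphenes over traversable ground are switched off, and phosphenes over obstacles are brightened the nearer the obstacle is, with a minimum visible level. The function name, the `max_range` cut-off, and the brightness rule are all assumptions for illustration; the source does not specify how augmented depth is computed.

```python
def augmented_depth_levels(depths, traversable, max_range, levels):
    """Brightness level (0..levels-1) per phosphene from depth and a ground mask.

    depths      -- distance per phosphene, in the same unit as max_range
    traversable -- True where the phosphene covers walkable ground
    """
    if len(depths) != len(traversable):
        raise ValueError("depths and traversable must have the same length")

    out = []
    for depth, is_ground in zip(depths, traversable):
        if is_ground or depth >= max_range:
            # Ground and out-of-range surfaces are left dark.
            out.append(0)
            continue
        # Nearer obstacles are brighter; nearness lies in (0, 1].
        nearness = 1.0 - max(depth, 0.0) / max_range
        level = int(nearness * (levels - 1) + 0.5)
        # Keep every in-range obstacle at least minimally visible.
        out.append(max(1, min(levels - 1, level)))
    return out
```

With `max_range=4.0` and `levels=8`, depths `[1.0, 1.0, 3.9, 5.0]` with only the first marked as ground give `[0, 5, 1, 0]`: the ground stays dark, the near obstacle is bright, the distant obstacle is faint but still visible, and the out-of-range surface is not shown.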

Related publications

Journal Articles

- “Mobility and low contrast trip hazard avoidance using augmented depth.”, McCarthy C, Walker JG, Lieby P, Scott AS, Barnes N, Journal of Neural Engineering, 12(1), Feb 2015.

- “The feasibility of coin motors for use in a vibrotactile display for the blind.”, Stronks HC, Barnes N, Parker D, Walker JG, Artificial Organs, accepted Sept 2014.

- “First-in-Human Trial of a Novel Suprachoroidal Retinal Prosthesis”, L N Ayton, P J Blamey, R H Guymer, C D Luu, D A X Nayagam, N C Sinclair, M N Shivdasani, J Yeoh, M F McCombe, R J Briggs, N L Opie, J Villalobos, P N Dimitrov, M Varsamidis, M A Petoe, C D McCarthy, J G Walker, N Barnes, A N Burkitt, C E Williams, R K Shepherd, P J Allen, for the Bionic Vision Australia Research Consortium, PLOS ONE, Dec 18, 2014, DOI: 10.1371/journal.pone.0115239.

- “A new theoretical approach to improving face recognition in disorders of central vision: Face caricaturing.”, Irons J, McKone E, Dumbleton R, Barnes N, He X, Provis J, Ivanovici C, Kwa A., Journal of Vision, 14(2), Feb 17, 2014.

- “The Role of Computer Vision in Prosthetic Vision”, N Barnes, Image and Vision Computing, 20, 478-439, 2012.

- “Estimating Relative Camera Motion from the Antipodal-Epipolar Constraint”, J Lim, N Barnes, H Li, IEEE Trans Pattern Analysis and Machine Intelligence, 32(10), Oct 2010, pp 1907-1914.

- “Estimation of the Epipole Using Optical Flow at Antipodal Points”, J Lim and N Barnes, Computer Vision and Image Understanding, 114(2), Special issue on Omnidirectional Vision, Camera Networks and Non-conventional Cameras, pp 245-253, Feb, 2010.

Fully Refereed full length conference papers

- “Importance Weighted Image Enhancement for Prosthetic Vision: An Augmentation Framework”, by C McCarthy, and N Barnes, in Int Symp on Mixed and Augmented Reality, ISMAR’14, Sept, 2014.

- “Large-Scale Semantic Co-Labeling of Image Sets”, by J M Alvarez, M Salzmann, and N Barnes, in Winter Applications of Computer Vision (IEEE-WACV), March, 2014.

- “Exploiting Sparsity for Real Time Video Labelling”, by L Horne, J M Alvarez, and N Barnes, in Computer Vision Technology: from Earth to Mars, Workshop at the International Conference on Computer Vision, Sydney, Australia, Dec, 2013. (Best Paper)

- “Learning Structured Hough Voting for Joint Object Detection and Occlusion Reasoning”, by T Wang, X He and N Barnes, in Proc IEEE-CVPR, Portland Oregon, USA, June, 2013

- “Glass object segmentation by label transfer on joint depth and appearance manifold”, by T Wang, X He and N Barnes, in Proc ICIP, Melbourne Australia, 2013

- “An overview of vision processing approaches in implantable prosthetic vision”, by N Barnes, in Proc ICIP, Melbourne Australia, 2013

- “Augmenting Intensity to enhance scene structure in prosthetic vision”, by C McCarthy, D Feng, N Barnes, in Proc Workshop Multimodal and Alternative Perception for Visually Impaired People, San Jose, USA, July, 2013. (Best Paper)

- “The Role of Vision Processing in Prosthetic Vision”, by N Barnes, X He, C McCarthy, L Horne, J Kim, A F Scott, and P Lieby, in Proc IEEE EMBC, August, 2012

- “Time-To-Contact Maps for Navigation with a Low Resolution Visual Prosthesis”, by C McCarthy and N Barnes, in Proc IEEE EMBC, August, 2012

- “Image Segmentation for Enhancing Symbol Recognition in Prosthetic Vision”, by L Horne, N Barnes, C McCarthy, X He, in Proc IEEE EMBC, August, 2012

- “On Just Noticeable Difference for Bionic Eye”, Yi Li, C McCarthy, and N Barnes, in Proc IEEE EMBC, August, 2012

- “Text Image Processing for Visual Prostheses”, by S Wang, Yi Li and N Barnes, in Proc IEEE EMBC, August, 2012

- “A Face-Based Visual Fixation System for Prosthetic Vision”, by X He, J Kim, and N Barnes, in Proc IEEE EMBC, August, 2012

- “Phosphene Vision of Depth and Boundary from Segmentation-Based Associative MRFs”, by Y Xie, N Liu, and N Barnes, in Proc IEEE EMBC, August, 2012

- “Ground surface segmentation for navigation with a visual prosthesis”, C. McCarthy, N. Barnes and P. Lieby, 2011 IEEE Conference on Engineering in Medicine and Biology (EMBC 2011).

- “Substituting Depth for Intensity and Real-Time Phosphene Rendering: Visual Navigation under Low Vision Conditions”, by P Lieby, N Barnes, C McCarthy, N Liu, H Dennet, J Walker, V Botea, and A Scott, in Proc 33rd Annual International IEEE Engineering in Medicine and Biology Society Conference, (IEEE-EMBS), Boston, USA, Aug 2011

- “Surface extraction from iso-disparity contours”, C. McCarthy and N. Barnes, 2010 Asian Conference on Computer Vision (ACCV 2010)

Conference abstracts

- “Lanczos2 Image Filtering Improves Performance on Low Vision Tests in Implanted Visual Prosthetic Patients”, by N. Barnes, A. F. Scott, A. Stacey, P. Lieby, M. Petoe, L. Ayton, M. Shivdasani, N. Sinclair, J G. Walker, Proceedings of the Association for Research in Vision and Ophthalmology annual meeting (ARVO 2014), Orlando, Florida, USA, May, 2014

- “Caricaturing improves face recognition in simulated age-related macular degeneration”, Elinor McKone, Jessica Irons, Xuming He, Nick Barnes, Jan Provis, Rachael Dumbleton, Callin Ivanovici, Alisa Kwa, VSS, 2013

- “Evaluating Lanczos2 image filtering for visual acuity in simulated prosthetic vision”, by P. Lieby, N. Barnes, J G. Walker, A F. Scott, N. Barnes and L. Ayton, Proceedings of the Association for Research in Vision and Ophthalmology annual meeting (ARVO 2013), Seattle, USA, May, 2013

- “Low contrast trip hazard avoidance with simulated prosthetic vision”, by C. McCarthy, P. Lieby, J G. Walker, A F. Scott, V. Botea and N. Barnes, Proceedings of the Association for Research in Vision and Ophthalmology annual meeting (ARVO 2012), Fort Lauderdale, FL USA, 2012. (oral presentation)

- “Evaluating Depth-based Visual Representations For Mobility In Simulated Prosthetic Vision”, by N. Barnes, P. Lieby, J G. Walker, C. McCarthy, V. Botea and A F. Scott, Proceedings of the Association for Research in Vision and Ophthalmology annual meeting (ARVO 2012), Fort Lauderdale, FL USA, 2012

- “Mobility Experiments Using Simulated Prosthetic Vision With 98 Phosphenes Of Limited Dynamic Range”, by P. Lieby, N. Barnes, C. McCarthy, V Botea, A F. Scott and J G. Walker, Proceedings of the Association for Research in Vision and Ophthalmology annual meeting (ARVO 2012), Fort Lauderdale, FL USA, 2012

- “Orientation and mobility considerations in Bionic Eye Research”, by Lauren Ayton, Sharon Haymes, Jill Keeffe, Chi Luu, Nick Barnes, Paulette Lieby, Janine G. Walker, Robyn Guymer, 14th International Mobility Conference, Palmerston North, New Zealand, Feb, 2012

- “Mobility Experiments with simulated vision and sensory substitution of depth”, N Barnes, P Lieby, H Dennet, C McCarthy, N Liu, J G Walker, ARVO, 2011

- Face detection and tracking in video to facilitate face recognition with a visual prosthesis, Xuming He, Chunhua Shen, N Barnes, ARVO, 2011

- “Investigating the role of single-viewpoint depth data in visually-guided mobility”, N Barnes, P Lieby, H Dennet, J G Walker, C McCarthy, N Liu, Yi Li, VSS, 2011.

- “Object detection for bionic vision”, by L Horne, N Barnes, X He and C McCarthy, 2nd Int Conf on Medical Bionics: Neural Interfaces for Damaged Nerves, Phillip Island, Vic, Australia, Nov, 2011

- “Automatic Face Zooming and Its Stability Analysis on a Phosphene Display”, by J Kim, X He and C McCarthy, 2nd Int Conf on Medical Bionics: Neural Interfaces for Damaged Nerves, Phillip Island, Vic, Australia, Nov, 2011

- “The impact of environment complexity on mobility performance for prosthetic vision using the visual representation of depth”, by J G Walker, N Barnes, P Lieby, C McCarthy and H Dennet, 43rd Ann Sci Congress of the Royal Australian and New Zealand College of Ophthalmologists – Sharing the Vision, Canberra, ACT, Australia, Nov, 2011

- “Expectations of a visual prosthesis: perspectives from people with impaired vision”, by J E Keeffe, K L Francis, C D Luu, N Barnes, E L Lamoureaux, R H Guymer, ARVO, 2010