Making Machine Learning Transparent

“Making ‘black-box’ machine learning easily understandable and usable by domain experts in order to make high quality decisions confidently.”

“Be informed and be involved in ML-based decision making.”

In the ML research field, there is often a gap between ML research and real-world impact because of the complexity of ML models. For instance, to a domain expert who may not have expertise in ML or programming, an ML algorithm is a “black box”: the user supplies parameters and input data to the “black box” and receives its output. Users find it difficult to understand complex ML models, for example what is happening inside a model and how it solves the learning problem. As a result, users are uncertain about ML results, which reduces the effectiveness of ML methods. Data61’s research addresses transparency and feedback in ML in order to:

- Make ML models more understandable

- Make ML processes more transparent

- Make ML outputs more meaningful

- Make ML techniques more accessible

- Make ML-based decision making measurable

Benefits

Research on making ML transparent will help formulate guidelines and standards for the user interaction design of ML-based intelligent applications. As a result, the results of transparent ML will help end users make high quality decisions confidently.

Scientific approach

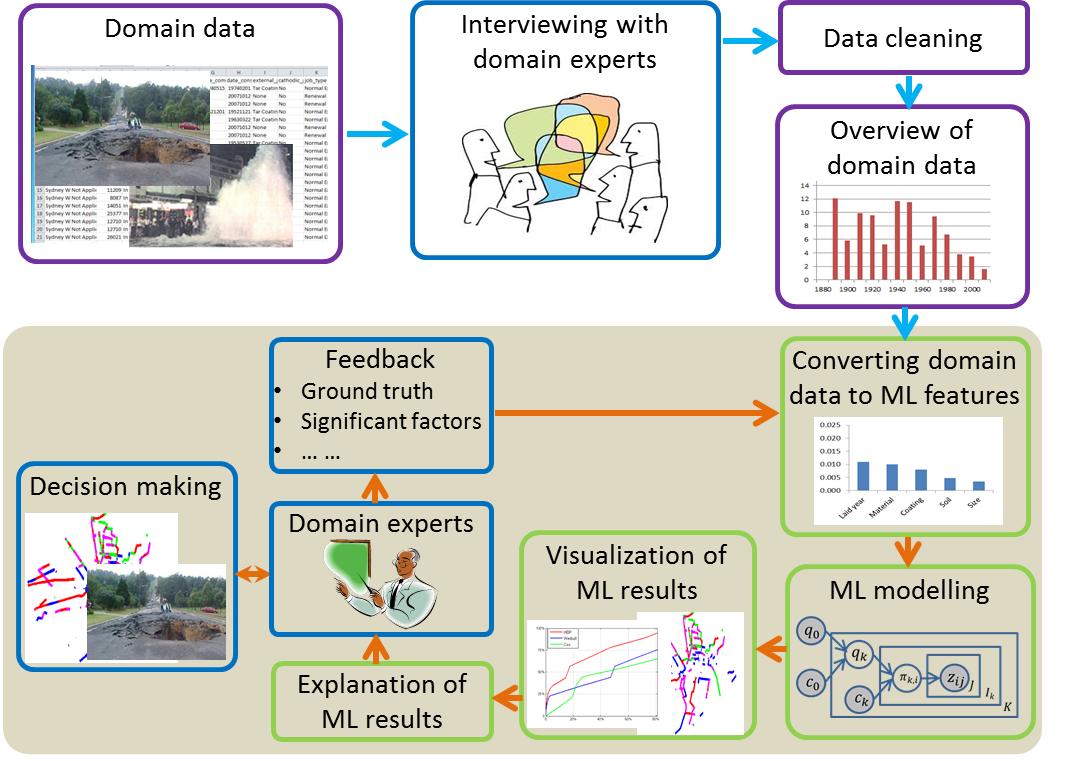

Data61’s research focuses on making the ML process understandable and usable by end users, by evaluating their experiences with HCI techniques. This includes the following steps:

- Revealing real-time updates of the internal status of ML models through meaningful presentations.

- Supporting adaptive, measurable decision making by varying ML-based decision factors.

- Investigating the effects of uncertainty and correlation on users’ confidence in ML-based decision making.

In Data61’s approach, ML models are evaluated on the quality of the decisions they support rather than directly on their outputs, which is more acceptable to both ML researchers and domain experts.

Previous work in the field

This project builds on Data61’s long-standing vision in ML, and relates to a number of other projects undertaken by our research team, including:

- Intelligent Pipes: advanced condition assessment and failure prediction;

- Intelligent Transport Systems (ITS): travel time prediction, incident prediction, correlation between travel time and incidents, etc.;

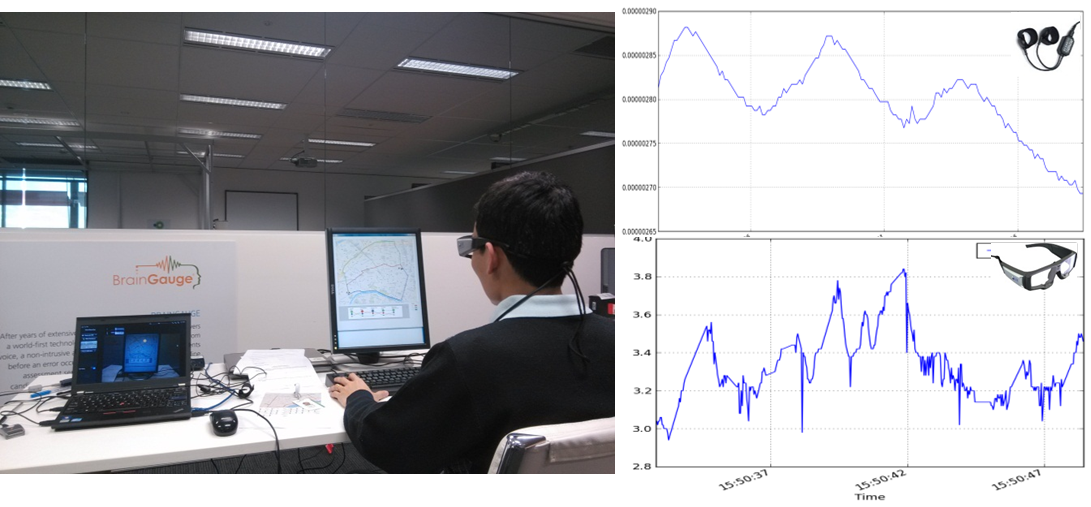

- Driver Mental State Monitoring (DMSM): understanding and predicting drivers’ mental states, including cognitive load and emotion, and how these relate to driving performance and user experience of car interfaces;

- Human Computer Interaction (HCI): study, planning, and design of the interaction between humans and computers;

- Cognitive Load Measurement (CLM): robust multimodal cognitive load measurement.

People: Fang Chen (Technical contact), Jianlong Zhou, Constant Bridon, Yang Wang, Ronnie Taib, Ahmad Khawaji, Zhidong Li